
the application of mathematical and statistical methods to books and other media of communication

At the same time, Nalimov and Mulchenko defined scientometrics as:

the application of those quantitative methods which are dealing with the analysis of science viewed as an information process

According to these definitions, scientometrics is restricted to science communication, whereas bibliometrics is designed to deal with more general information processes. Nowadays, the borderlines between the two specialities have almost vanished, and the two terms are used almost as synonyms.

The statistical analysis of scientific literature began years before the term bibliometrics was coined. The main contributions are Lotka's Law of scientific productivity, Bradford's Law of scatter, and Zipf's Law of word occurrence. In 1926, Alfred J. Lotka published a study on the frequency distribution of scientific productivity determined from a decennial index of Chemical Abstracts [4] (see Table 1). Lotka concluded that:

In a given field, the number of authors making $n$ contributions is about $1/n^2$ of those making one.

Lotka's Law means that few authors contribute most of the papers, while many or most authors contribute few publications. For instance, in the original data of Lotka's study illustrated in Table 1, the 1350 most prolific authors (those with at least 3 papers, 21% of the total) wrote more than half of the papers (6429 papers, 51% of the total).

| papers per author (n) | observed authors | expected authors (3991/n²) | total papers |
|---|---|---|---|
| 1 | 3991 | 3991 | 3991 |
| 2 | 1059 | 998 | 2118 |
| 3 | 493 | 443 | 1473 |
| 4 | 287 | 249 | 1148 |
| 5 | 184 | 160 | 920 |
| 6 | 131 | 111 | 786 |
| 7 | 85 | 81 | 595 |
| 8 | 64 | 62 | 512 |
| 9 | 65 | 49 | 585 |
| 10 | 41 | 40 | 410 |
| total | 6400 | 6184 | 12538 |

If scientific journals are arranged in order of decreasing productivity on a given subject, they can be divided into groups of different sizes (number of journals) each containing the same number of papers relevant to the subject. The size of each group (except the first) is given by the size of the previous group multiplied by a constant.

Bradford formulated his law after studying a bibliography of geophysics. The journals can be divided into 3 groups of different sizes but containing about the same number of relevant papers: a core group containing 2 journals and 179 relevant papers, a second group containing 4 journals and 185 relevant papers, and a third group containing 11 journals and 186 relevant papers.
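The Bradford grouping can be sketched in code. The Python below is a minimal illustration, not Bradford's original procedure: it ranks journals by decreasing number of relevant papers and cuts the ranking greedily into k zones of roughly equal paper counts. The per-journal counts in the usage lines are hypothetical. The `multipliers` helper computes the ratio between consecutive group sizes, which for the geophysics groups above (2, 4, and 11 journals) gives 2 and 2.75.

```python
def bradford_zones(counts, k=3):
    """Rank journals by decreasing productivity and cut the ranking into k zones
    holding roughly the same number of relevant papers (greedy cut)."""
    ranked = sorted(counts, reverse=True)
    target = sum(ranked) / k  # papers per zone if the split were perfect
    zones, current, cumulative = [], [], 0
    for c in ranked:
        current.append(c)
        cumulative += c
        # Close the current zone once the running total reaches its share,
        # leaving everything that remains to the last zone.
        if len(zones) < k - 1 and cumulative >= target * (len(zones) + 1):
            zones.append(current)
            current = []
    zones.append(current)
    return zones


def multipliers(sizes):
    """Ratios between consecutive zone sizes (number of journals per zone)."""
    return [b / a for a, b in zip(sizes, sizes[1:])]


# Group sizes reported above for the geophysics bibliography
print(multipliers([2, 4, 11]))  # [2.0, 2.75]

# Hypothetical per-journal counts of relevant papers
zones = bradford_zones([60, 40, 25, 25, 25, 25] + [10] * 10)
print([len(z) for z in zones], [sum(z) for z in zones])  # [2, 4, 10] [100, 100, 100]
```

Here the hypothetical zones contain 2, 4, and 10 journals, so the multiplier is about 2 to 2.5, the kind of geometric growth Bradford's Law describes.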

In relatively lengthy texts, if words occurring within the text are listed in order of decreasing frequency, then the rank of a word on that list multiplied by its frequency will equal a constant, which depends on the analyzed text.

| rank | frequency | rank × frequency |
|---|---|---|
| 10 | 2653 | 26530 |
| 20 | 1311 | 26220 |
| 30 | 926 | 27780 |
| 40 | 717 | 28680 |
| 50 | 556 | 27800 |
| 100 | 265 | 26500 |
| 200 | 133 | 26600 |
| 300 | 84 | 25200 |
| 400 | 62 | 24800 |
| 500 | 50 | 25000 |
| 1000 | 26 | 26000 |
| 2000 | 12 | 24000 |
| 3000 | 8 | 24000 |
| 4000 | 6 | 24000 |
| 5000 | 5 | 25000 |
| 10000 | 2 | 20000 |
| 20000 | 1 | 20000 |
| 29899 | 1 | 29899 |
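The rank-frequency product in the table can be computed for any text. This Python sketch assumes a naive tokenizer (lowercase runs of letters and apostrophes, a simplification chosen only for illustration); it ranks words by decreasing frequency and reports rank × frequency, which Zipf's law predicts to be roughly constant for long texts.

```python
import re
from collections import Counter


def zipf_table(text):
    """Return (rank, word, frequency, rank * frequency) tuples, most frequent first."""
    words = re.findall(r"[a-z']+", text.lower())
    ranked = Counter(words).most_common()  # ties keep first-encountered order
    return [(rank, word, freq, rank * freq)
            for rank, (word, freq) in enumerate(ranked, start=1)]


# Rank/frequency pairs copied from the table above: the products stay close.
table = [(10, 2653), (100, 265), (1000, 26), (10000, 2)]
products = [rank * freq for rank, freq in table]
print(products)  # [26530, 26500, 26000, 20000]

for row in zipf_table("the cat and the dog and the bird"):
    print(row)
```

On a toy sentence the products fluctuate a lot; the near-constancy in the table only emerges for long texts, as the law's statement requires.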

Each individual will adopt a course of action that will involve the expenditure of the probably least average of his work.

According to Zipf, if the Principle of Least Effort holds, the speaker (or writer) tends to minimize the number and length of words (which he calls the Force of Unification) by overloading the same word with different meanings, while the hearer (or reader) calls for a diversification of words (which he calls the Force of Diversification) by assigning different meanings to different words. For communication to be effective, these opposing forces need to balance, giving rise to the law of word occurrence described above.

Only at the beginning of the 1980s, with the fast development of computer science, could bibliometrics evolve into a distinct scientific discipline with a specific research profile and corresponding communication structures. Scientometrics, the first international periodical specializing in bibliometric topics, started in 1979. The fact that bibliometric methods are already being applied to the bibliometric field itself also indicates the rapid growth of the discipline.

Citations are signposts left behind after information has been utilized.

Cronin [12], in turn, defined citations as:

frozen footprints in the landscape of scholarly achievement which bear witness of the passage of ideas.

However, problems with citation analysis as a reliable instrument of measurement and evaluation have been acknowledged. Citations reflect both the needs and the idiosyncrasies of the citer, including such factors as utility, quality, availability, advertising (self-citations), collaboration or comradeship (in-house citations), chauvinism, mentoring, personal sympathies and antipathies, competition, neglect, obliteration by incorporation, augmentation, flattery, convention, reference copying, reviewing, and secondary referencing [13]. As Seglen says ([14], page 636), "while the sheer number of factors may help to achieve some statistical balance, we all know of scientists who are cited either much less (ourselves) or much more than they deserve on the basis of their scientific achievements". Nevertheless, citation analysis has demonstrated its reliability and usefulness as a tool for ranking and evaluating scholars and their publications [15]. Furthermore, the robustness of citations as a method to evaluate impact is attested by the adoption of a similar approach in several fields far removed from bibliometrics, including web pages connected by hyperlinks [16,17], patents and their citations [18], published opinions of judges and their citations within and across opinion circuits [19], and even sections of the Bible and the biblical citations they receive in religious texts [20].

It is not wise to force the assessment of researchers or of research groups into just one measure, because it reinforces the opinion that scientific performance can be expressed simply by one note. Several indicators are necessary in order to illuminate different aspects of performance.

Moreover, Glänzel [22] adds:

the use of a single index crashes the multidimensional space of bibliometrics into one single dimension.

Two potential dangers of condensing the quality of research down to a single metric are:


$\displaystyle{h_{i,j} = \frac{c_{i,j}}{\sum_{k} c_{i,k}}}$

for all non-dangling $i$ and all $j$. Furthermore, $H$ is mapped to a matrix $\hat{H}$ in which all rows corresponding to dangling nodes are replaced with the article vector $a$. Notice that $\hat{H}$ is row-stochastic, that is, all its entries are non-negative and each row sums to 1. A new row-stochastic matrix $P$ is defined as follows:

$\displaystyle{P = \alpha \hat{H} + (1- \alpha) A}$

where $A$ is the matrix with identical rows, each equal to the article vector $a$, and $\alpha$ is a free parameter of the algorithm, usually set to $0.85$. Let $\pi$ be the left eigenvector of $P$ associated with the unity eigenvalue, that is, the vector $\pi$ such that $\pi = \pi P$. It is possible to prove that this vector exists and, once normalized to sum to 1, is unique. The vector $\pi$, called the influence vector, contains the scores used to weight the citations stored in matrix $H$. Finally, the Eigenfactor vector $r$ is computed as

$\displaystyle{r = 100 \cdot \frac{\pi H}{\sum_{i} [\pi H]_i}}$

That is, the Eigenfactor score of a journal is the sum of the normalized citations it receives from other journals, weighted by the influence weights of the citing journals. The Eigenfactor scores are normalized so that they sum to 100.

The Eigenfactor metric has a solid mathematical background and an intuitive stochastic interpretation. The modified citation matrix $P$ is row-stochastic and can be interpreted as the transition matrix of a Markov chain on a finite set of states (journals). Hence, the influence vector $\pi$ corresponds to the stationary distribution of the associated Markov chain. Since $P$ is a primitive matrix, the Markov theorem applies: $\pi$ is the *unique* stationary distribution and, moreover, the influence weight $\pi_j$ of the $j$th journal is the limiting probability of being in state $j$ as the number of transition steps of the chain tends to infinity. In addition, the Perron theorem for primitive matrices ensures that $\pi$ is a strictly positive vector corresponding to the leading eigenvector of $P$, that is, the eigenvector associated with the largest eigenvalue, which is 1 because $P$ is stochastic.

The described stochastic Markov process has an intuitive interpretation in terms of random walks on the citation network [41]. Imagine a researcher who moves from journal to journal by following chains of citations. The researcher selects a journal article at random and reads it. Then, the researcher retrieves at random one of the citations in the article and proceeds to the cited journal. From there, the researcher chooses at random an article from the reached journal and goes on like this. Eventually, the researcher gets bored of following citations and selects a random journal in proportion to the number of articles published by each journal.
With this model of research, by virtue of the Ergodic theorem for Markov chains, the influence weight of a journal corresponds to the relative frequency with which the random researcher visits the journal. The Eigenfactor score is a size-dependent measure of the total influence of a journal, rather than a per-article measure such as the impact factor. To make the Eigenfactor scores size-independent and comparable to impact factors, the journal influence must be divided by the number of articles published in the journal. This measure, called Article Influence™, is available both at the Eigenfactor web site and in Thomson Reuters' JCR.
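The computation described above can be sketched in pure Python with power iteration. This is a minimal illustration under stated assumptions: `C[i][j]` counts citations from journal i to journal j, `articles[j]` is the number of articles published by journal j (normalized here into the article vector $a$), and the tolerance and iteration cap are arbitrary choices. The production Eigenfactor computation may involve further preprocessing of the citation data that is not modeled here, and the toy network is hypothetical.

```python
def eigenfactor(C, articles, alpha=0.85, tol=1e-12, max_iter=1000):
    """Return (influence vector pi, Eigenfactor scores r) for citation matrix C."""
    n = len(C)
    total_articles = sum(articles)
    a = [x / total_articles for x in articles]  # article vector
    # H: each non-dangling row divided by its sum; dangling rows remain zero
    H = [[c / sum(row) for c in row] if sum(row) > 0 else [0.0] * n for row in C]
    dangling = [sum(row) == 0 for row in C]

    pi = a[:]  # any probability vector works as a starting point
    for _ in range(max_iter):
        # Rows of H-hat for dangling journals equal a, so their mass follows a
        dangling_mass = sum(p for p, d in zip(pi, dangling) if d)
        new_pi = []
        for j in range(n):
            flow = sum(pi[i] * H[i][j] for i in range(n))
            # (pi P)_j = alpha * (pi H-hat)_j + (1 - alpha) * a_j, since sum(pi) = 1
            new_pi.append(alpha * (flow + dangling_mass * a[j]) + (1 - alpha) * a[j])
        converged = sum(abs(x - y) for x, y in zip(new_pi, pi)) < tol
        pi = new_pi
        if converged:
            break

    # r = 100 * pi H / sum(pi H), using H (not H-hat)
    pi_h = [sum(pi[i] * H[i][j] for i in range(n)) for j in range(n)]
    total = sum(pi_h)
    return pi, [100 * x / total for x in pi_h]


# Hypothetical toy network: journal 2 cites no one (dangling)
C = [[0, 2, 1],
     [1, 0, 1],
     [0, 0, 0]]
articles = [10, 20, 10]
pi, r = eigenfactor(C, articles)
print([round(x, 4) for x in pi], [round(x, 2) for x in r])
```

Power iteration is used instead of an explicit eigen-solver because each step only applies $\pi \mapsto \pi P$, and the teleportation term $(1-\alpha)a$ makes the error shrink by a factor of about $\alpha$ per step.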

| ref | compared sources | field |
|---|---|---|
| [47] | CiteSeer and Web of Science | computer science |
| [48] | CiteSeer and Web of Science | computer science |
| [49] | Chemical Abstracts and Web of Science | chemistry |
| [50] | Web of Science, Scopus, and Google Scholar | JASIST papers |
| [51] | Web of Science, Scopus, and Google Scholar | Current Science papers and Eugene Garfield papers |
| [52] | Web of Science and Google Scholar | webometrics |
| [53] | Web of Science and Google Scholar | different disciplines |
| [54] | Web of Science, Scopus, and Google Scholar | oncology and condensed matter physics |
| [55] | Web of Science and Google Scholar | different disciplines |
| [56] | Web of Science and Google Scholar | business sciences |
| [57] | CSA Illumina, Web of Science, Scopus, and Google Scholar | social sciences |
| [58] | Web of Science, Scopus, and Google Scholar | different disciplines |
| [59] | Web of Science, Scopus, and Google Scholar | library and information science |
| [60] | Web of Science and Google Scholar | different disciplines |
| [61] | Web of Science and Google Scholar | information science |
| [62] | Web of Science, Scopus, and Google Scholar | human-computer interaction |
| [63] | Web of Science, Scopus, and Google Scholar | different disciplines |
| [64] | Web of Science, Scopus, and Google Scholar | library and information science and information retrieval |
| [65] | Web of Science, Scopus, Google Scholar, and Chemical Abstracts | chemistry |

$\displaystyle{f(x) = \frac{\alpha}{x^{\alpha + 1}}}$

for $x \geq 1$. The cumulative distribution function is:

$\displaystyle{F(x) = 1 - \frac{1}{x^{\alpha}} }$

The mean is $\alpha / (\alpha -1)$ for $\alpha > 1$, and infinite otherwise. The median is $2^{1/\alpha}$ and the mode is $1$. Notice that, for $\alpha > 1$, the mean is greater than the median, which is greater than the mode, and that as $\alpha \rightarrow \infty$ both the mean and the median tend to the mode $1$. The skewness is

$\displaystyle{\gamma = \frac{2 (1 + \alpha)}{\alpha - 3} \sqrt{\frac{\alpha - 2}{\alpha}}}$

for $\alpha > 3$, and the excess kurtosis is

$\displaystyle{\kappa = \frac{6(\alpha^3 + \alpha^2 - 6 \alpha - 2)}{\alpha (\alpha-3) (\alpha-4)}}$

for $\alpha > 4$. Both skewness and excess kurtosis are greater than zero and tend to 2 and 6, respectively, as $\alpha \rightarrow \infty$. The raw moments are $E(X^n) = \alpha / (\alpha - n)$ for $\alpha > n$.
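The closed-form Pareto quantities above are easy to evaluate and to check by simulation. The sketch below assumes the Pareto distribution with minimum value 1 and samples it by inverse-transform sampling, $x = (1-u)^{-1/\alpha}$ for $u$ uniform on $[0, 1)$, which follows from solving $F(x) = u$. The sample size and seed are arbitrary choices.

```python
import random
from math import sqrt


def pareto_summary(alpha):
    """Closed-form summaries for f(x) = alpha / x^(alpha + 1), x >= 1."""
    mean = alpha / (alpha - 1) if alpha > 1 else float("inf")
    median = 2 ** (1 / alpha)
    skewness = (2 * (1 + alpha) / (alpha - 3) * sqrt((alpha - 2) / alpha)
                if alpha > 3 else None)  # undefined for alpha <= 3
    excess_kurtosis = (6 * (alpha ** 3 + alpha ** 2 - 6 * alpha - 2)
                       / (alpha * (alpha - 3) * (alpha - 4))
                       if alpha > 4 else None)  # undefined for alpha <= 4
    return {"mean": mean, "median": median, "mode": 1.0,
            "skewness": skewness, "excess_kurtosis": excess_kurtosis}


def pareto_sample(alpha, size, seed=1):
    """Inverse-transform sampling: solve F(x) = u for x."""
    rng = random.Random(seed)
    return [(1 - rng.random()) ** (-1 / alpha) for _ in range(size)]


summary = pareto_summary(3)
xs = sorted(pareto_sample(3, 200_000))
print(summary["mean"], sum(xs) / len(xs))    # closed form vs sample mean
print(summary["median"], xs[len(xs) // 2])   # closed form vs sample median
```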

$\displaystyle{f(x) = \alpha \lambda^\alpha x^{\alpha -1} e^{-(\lambda x)^\alpha}}$

where $x \geq 0$, $\lambda > 0$ and $0 < \alpha \leq 1$. In particular, if the stretching parameter $\alpha = 1$, the distribution is the usual exponential distribution. When the parameter $\alpha$ is not bounded above by $1$, the resulting distribution is better known as the Weibull distribution. The cumulative distribution function is:

$\displaystyle{F(x) = 1 - e^{-(\lambda x)^\alpha}}$

It can be shown that the $n$th raw moment $E(X^n)$ is $\frac{1}{\lambda^n} \Gamma(\frac{\alpha + n}{\alpha})$, where $\Gamma(x)$ is the Gamma function, an extension of the factorial function to real and complex numbers, defined by:

$\displaystyle{\Gamma(x) = \int_{0}^{\infty} t^{x-1} e^{-t} \, dt}$

In particular, it holds that $\Gamma(1) = 1$ and $\Gamma(x+1) = x \Gamma(x)$. Hence, for a positive integer $n$, we have $\Gamma(n+1) = n!$. Notice that, if $\alpha = 1$, the raw moments are $n! / \lambda^n$, which are exactly the raw moments of the exponential distribution. The mean $E(X)$ is the first raw moment, equal to $\frac{1}{\lambda} \Gamma(\frac{\alpha + 1}{\alpha})$. The median is $\frac{1}{\lambda} (\log 2)^{1/\alpha}$ and the mode is 0. Notice again that the mean is greater than the median, which is greater than the mode.
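The stretched exponential moments can be evaluated with the Gamma function from Python's standard library (`math.gamma`). The sketch below is illustrative and the parameter values in the usage lines are arbitrary. It checks that, for $\alpha = 1$, the raw moments reduce to the exponential ones, $n!/\lambda^n$, and that $F$ evaluated at the median gives $1/2$.

```python
from math import exp, factorial, gamma, log


def raw_moment(alpha, lam, n):
    """E(X^n) = Gamma((alpha + n) / alpha) / lam^n for the stretched exponential."""
    return gamma((alpha + n) / alpha) / lam ** n


def cdf(x, alpha, lam):
    """F(x) = 1 - exp(-(lam * x)^alpha)."""
    return 1 - exp(-(lam * x) ** alpha)


def median(alpha, lam):
    """Solution of F(x) = 1/2, i.e. (log 2)^(1/alpha) / lam."""
    return log(2) ** (1 / alpha) / lam


# alpha = 1: exponential case, raw moments equal n! / lam^n
lam = 2.0
print([raw_moment(1, lam, n) for n in range(1, 5)])
print([factorial(n) / lam ** n for n in range(1, 5)])

# alpha = 0.5: mean (Gamma(3) = 2) > median > mode (= 0)
print(raw_moment(0.5, 1.0, 1), median(0.5, 1.0))
```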

$\displaystyle{f(x) = \frac{1}{x \sigma \sqrt{2 \pi}} e^{-\frac{(\log(x)-\mu)^2}{2 \sigma^2}}}$

for $x > 0$. The parameters $\mu$ and $\sigma$ are the mean and standard deviation of the variable's natural logarithm. The cumulative distribution function has no closed-form expression in terms of elementary functions; as for the normal distribution, it is written in terms of the error function, $F(x) = \frac{1}{2} + \frac{1}{2} \operatorname{erf}\left(\frac{\log(x) - \mu}{\sigma \sqrt{2}}\right)$. The mean is $e^{\mu + \sigma^2 / 2}$, the median is $e^{\mu}$ and the mode is $e^{\mu - \sigma^2}$. Notice that the mean is greater than the median, which is greater than the mode. This suggests a positive asymmetry of the distribution. Indeed, the skewness is $(e^{\sigma^2} + 2) \sqrt{e^{\sigma^2} - 1} > 0$. Moreover, the excess kurtosis is $e^{4 \sigma^2} + 2 e^{3 \sigma^2} + 3 e^{2 \sigma^2} - 6 > 0$. The raw moments are given by $E(X^n) = e^{n \mu + n^2 \sigma^2 / 2}$.

It is interesting to observe that all the above distributions commonly used to model bibliometric phenomena are positively (right) skewed. A distribution is symmetric if the values are equally distributed around a typical figure (the mean); a well-known example is the normal (Gaussian) distribution. A distribution is right-skewed if it contains many low values and relatively few high values. It is left-skewed if it comprises many high values and relatively few low values. As a rule of thumb, when the mean is larger than the median the distribution is right-skewed, and when the median dominates the mean the distribution is left-skewed. A more precise numerical indicator of distribution skewness is the third standardized central moment, that is

$\displaystyle{\gamma = \frac{E[(X - \mu)^3]}{\sigma^3}}$

where $\mu$ and $\sigma$ are the mean and standard deviation of the random variable $X$, respectively. A value close to 0 indicates symmetry; a value greater than 0 corresponds to right skewness, and a value lower than 0 means left skewness. The observed right skewness might be considered an application of the more general Pareto Principle (also known as the 80-20 rule) [7]. The principle states that:

Most (usually 80%) of the effects come from few (usually 20%) of the causes.

It has been suggested that the irreducible skewness of the distributions of scholarly productivity and article citedness may be explained by sociological reinforcement mechanisms such as the Principle of Cumulative Advantage. De Solla Price formulated this in 1976 as follows [8]:

Success seems to breed success. A paper which has been cited many times is more likely to be cited again than one which has been little cited. An author of many papers is more likely to publish again than one who has been less prolific. A journal which has been frequently consulted for some purpose is more likely to be turned to again than one of previously infrequent use.
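The cumulative advantage mechanism, together with the skewness indicator $\gamma$ defined above, can be illustrated with a toy simulation. In the Python sketch below, which is a simplified Price-style model and not a procedure taken from the cited works, each new paper cites up to three distinct earlier papers, chosen with probability proportional to their current citations plus one (the "plus one" lets uncited papers be picked at all). The number of papers, references per paper, and seed are arbitrary assumptions. The resulting citation distribution has a mean above its median and a positive sample skewness.

```python
import random
import statistics


def simulate_cumulative_advantage(n_papers=2000, refs_per_paper=3, seed=42):
    """Toy model: citation probability proportional to (citations received + 1)."""
    rng = random.Random(seed)
    citations = [0]
    urn = [0]  # paper i appears citations[i] + 1 times in the urn
    for new_paper in range(1, n_papers):
        k = min(refs_per_paper, new_paper)  # cannot cite more papers than exist
        cited = set()
        while len(cited) < k:
            cited.add(rng.choice(urn))
        for p in cited:
            citations[p] += 1
            urn.append(p)  # one more citation, one more ticket
        citations.append(0)
        urn.append(new_paper)  # the new paper enters with one ticket
    return citations


def sample_skewness(xs):
    """Third standardized central moment, E[(X - mu)^3] / sigma^3, of a sample."""
    mu = statistics.mean(xs)
    sigma = statistics.pstdev(xs)
    return sum((x - mu) ** 3 for x in xs) / (len(xs) * sigma ** 3)


cites = simulate_cumulative_advantage()
print("mean:", statistics.mean(cites), "median:", statistics.median(cites))
print("skewness:", round(sample_skewness(cites), 2))
```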


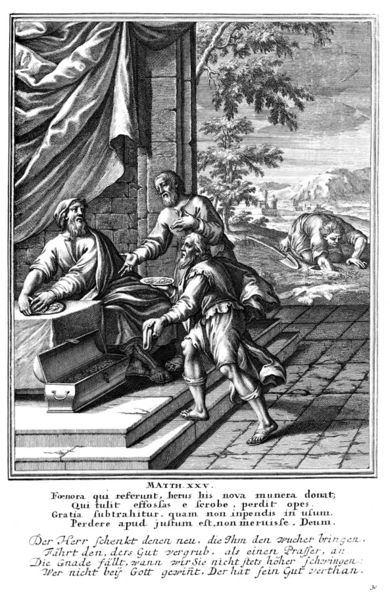

The Matthew Effect consists in the accruing of greater increments of recognition for particular scientific contributions to scientists of considerable repute and the withholding of such recognition from scientists who have not yet made their mark.

It takes its name from the following line in Jesus' parable of the talents in the Gospel of Matthew: "For unto every one that hath shall be given, and he shall have abundance: but from him that hath not shall be taken away even that which he hath." Interestingly, Seglen claims that skewness is an intrinsic characteristic of distributions related to extreme types of human effort; although scientific ability may be normally distributed in the general population, scientists are likely to form an extreme-property distribution within their speciality, whether in terms of citedness or of productivity. This statistical pattern is expected for different types of highly specialized human activity, a parallel being found in the distribution of performance among top athletes [14].

$\displaystyle{r_{i,j} = \frac{\sum_k c_{i,k} \cdot c_{j,k}}{\sqrt{c_i \cdot c_j}}}$

This is the ratio between the number of references shared by publications $p_i$ and $p_j$ and the geometric mean of the numbers of references of the two papers. Notice that $0 \leq r_{i,j} \leq 1$, with $r_{i,j} = 0$ when publications $p_i$ and $p_j$ share no references and $r_{i,j} = 1$ when publications $p_i$ and $p_j$ have the same bibliography. Geometrically, $r_{i,j}$ is the cosine of the angle formed by the $i$th and $j$th rows of the citation matrix, which is $0$ when the two vectors are orthogonal and $1$ when they are parallel. In matrix notation, let $A = (a_{i,j}) = C C^T$, that is, $a_{i,j}$ is the number of references shared by the $i$th and $j$th publications; in particular, $a_{i,i} = c_i$ is the number of references of $p_i$. Let $D$ be the diagonal matrix whose $i$th diagonal entry is $1 / \sqrt{a_{i,i}}$. Then $R = (r_{i,j})$ is given by $R = D A D$.

On the other hand, a measure of co-citation coupling between publications $p_i$ and $p_j$ is:

$\displaystyle{s_{i,j} = \frac{\sum_k c_{k,i} \cdot c_{k,j}}{\sqrt{c^i \cdot c^j}}}$

This is the ratio between the number of articles that cite both publications $p_i$ and $p_j$ and the geometric mean of the numbers of citations received by the two publications. It is also the cosine of the angle formed by the $i$th and $j$th columns of the citation matrix. Again, $0 \leq s_{i,j} \leq 1$, with $s_{i,j} = 0$ when publications $p_i$ and $p_j$ are never co-cited and $s_{i,j} = 1$ when publications $p_i$ and $p_j$ are always cited together. In matrix notation, let $B = (b_{i,j}) = C^T C$, that is, $b_{i,j}$ is the number of articles that co-cite the $i$th and $j$th publications; in particular, $b_{i,i} = c^i$ is the number of citations gathered by $p_i$. Let $D'$ be the diagonal matrix whose $i$th diagonal entry is $1 / \sqrt{b_{i,i}}$. Then $S = (s_{i,j})$ is given by $S = D' B D'$. It is worth noticing that the similarity formulas used in citation coupling closely resemble the Pearson correlation coefficient for two statistical samples $x$ and $y$, that is:

$\displaystyle{r_{xy} = \frac{\sigma_{xy}}{\sigma_x \cdot \sigma_y} = \frac{\sum_{k} (x_{k} - \mu_x) \cdot (y_{k} - \mu_y)}{\sqrt{\sum_{k} (x_{k} - \mu_x)^2} \cdot \sqrt{\sum_{k} (y_{k} - \mu_y)^2}}}$

In particular, when the means of both samples $x$ and $y$ are zero, the Pearson correlation coefficient is exactly the cosine of the angle formed by the two sample vectors, and the two measures coincide.

Once the similarity strength between bibliometric units has been established, the units are typically represented as graph nodes, and the similarity relationship between two units is represented as a weighted edge connecting them, where weights stand for the similarity intensity. Such visualizations are called bibliometric maps. These maps are powerful, but they are often highly complex. It is therefore helpful to abstract the network into interconnected modules of nodes. Good abstractions both simplify and highlight the underlying structure and the relationships that they depict. When the units are publications or concepts, the identified modules in most cases represent recognizable research fields. In the rest of this section, we describe three methods for creating these abstractions: clustering, principal component analysis, and information-theoretic abstractions.
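The matrix formulations $R = DAD$ and $S = D'BD'$ translate directly into code. The following pure-Python sketch assumes a binary citation matrix `C` with `C[i][j] = 1` when publication $p_i$ cites $p_j$. The toy matrix is hypothetical, and setting the similarity to 0 for publications with no references (or no citations), where the formula is undefined, is a convention of this sketch. It also computes Pearson's $r$ as the cosine of the mean-centred samples, matching the observation above.

```python
from math import sqrt


def transpose(X):
    return [list(col) for col in zip(*X)]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def cosine_normalize(M):
    """Return D M D with D = diag(1 / sqrt(M[i][i])); a zero diagonal gives 0."""
    d = [1 / sqrt(M[i][i]) if M[i][i] > 0 else 0.0 for i in range(len(M))]
    return [[d[i] * M[i][j] * d[j] for j in range(len(M))] for i in range(len(M))]


def bibliographic_coupling(C):
    return cosine_normalize(matmul(C, transpose(C)))  # R = D A D with A = C C^T


def co_citation(C):
    return cosine_normalize(matmul(transpose(C), C))  # S = D' B D' with B = C^T C


def pearson(x, y):
    """Pearson's r as the cosine of the mean-centred sample vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    xc = [v - mx for v in x]
    yc = [v - my for v in y]
    dot = sum(a * b for a, b in zip(xc, yc))
    return dot / (sqrt(sum(a * a for a in xc)) * sqrt(sum(b * b for b in yc)))


# Hypothetical toy data: p0 and p1 cite p2 and p3, p2 cites p3, p3 cites nothing
C = [[0, 0, 1, 1],
     [0, 0, 1, 1],
     [0, 0, 0, 1],
     [0, 0, 0, 0]]
R = bibliographic_coupling(C)
S = co_citation(C)
print(R[0][1], R[0][2])  # same bibliography; 1 shared reference out of 2 and 1
print(S[2][3])           # co-cited twice; cited 2 and 3 times
```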

$\displaystyle{\frac{\lambda_1 + \lambda_2 + \ldots + \lambda_m}{\lambda_1 + \lambda_2 + \ldots + \lambda_n} \geq \alpha}$

where $0 < \alpha \leq 1$ is a threshold (often fixed at $0.8$).
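The selection rule can be sketched as follows. The sketch sorts the eigenvalues in decreasing order (an assumption, since the rule is meant to retain the largest components) and returns the smallest $m$ whose cumulative share reaches the threshold $\alpha$. The eigenvalues in the usage line are made up for illustration.

```python
def n_components(eigenvalues, alpha=0.8):
    """Smallest m such that the m largest eigenvalues explain at least alpha of the total."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    values = sorted(eigenvalues, reverse=True)
    total = sum(values)
    cumulative = 0.0
    for m, value in enumerate(values, start=1):
        cumulative += value
        if cumulative / total >= alpha:
            return m
    return len(values)  # guard against floating-point shortfall


print(n_components([4.0, 2.0, 1.0, 0.5, 0.5]))  # 3, since (4 + 2 + 1) / 8 = 0.875
```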

"Measuring is knowing" - Heike Kamerlingh Onnes

"Not everything that can be counted counts, and not everything that counts can be counted" - Albert Einstein

"If scientometrics is a mirror of science in action, then scientometricians' particular responsibility is to both polish the mirror and warn against optical illusions" - Michel Zitt

"No amount of fancy statistical footwork will overcome basic inadequacies in either the appropriateness or the integrity of the data collected" - Goldstein and Spiegelhalter

"We think of statistics as facts that we discover, not numbers we create" - Joel Best

"Citations are frozen footprints in the landscape of scholarly achievement which bear witness of the passage of ideas" - Blaise Cronin

"The use of a single index crashes the multidimensional space of bibliometrics into one single dimension" - Wolfgang Glänzel

"For unto every one that hath shall be given, and he shall have abundance: but from him that hath not shall be taken away even that which he hath" - Jesus of Nazareth